Brain3D: Generating 3D Objects from fMRI

Yuankun Yang, Li Zhang (Corresponding Author), Ziyang Xie, Zhiyuan Yuan, Jianfeng Feng, Xiatian Zhu, Yu-Gang Jiang

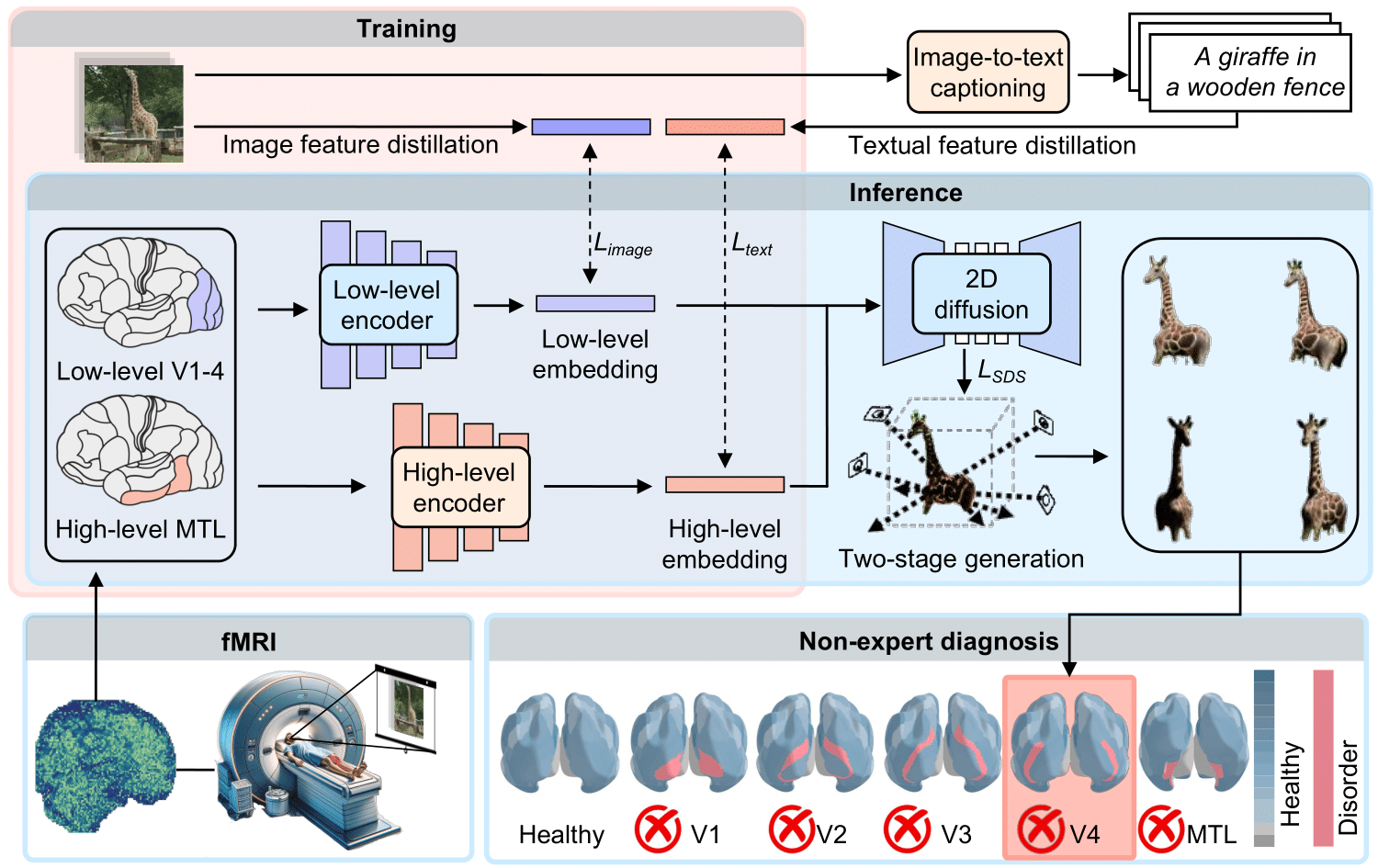

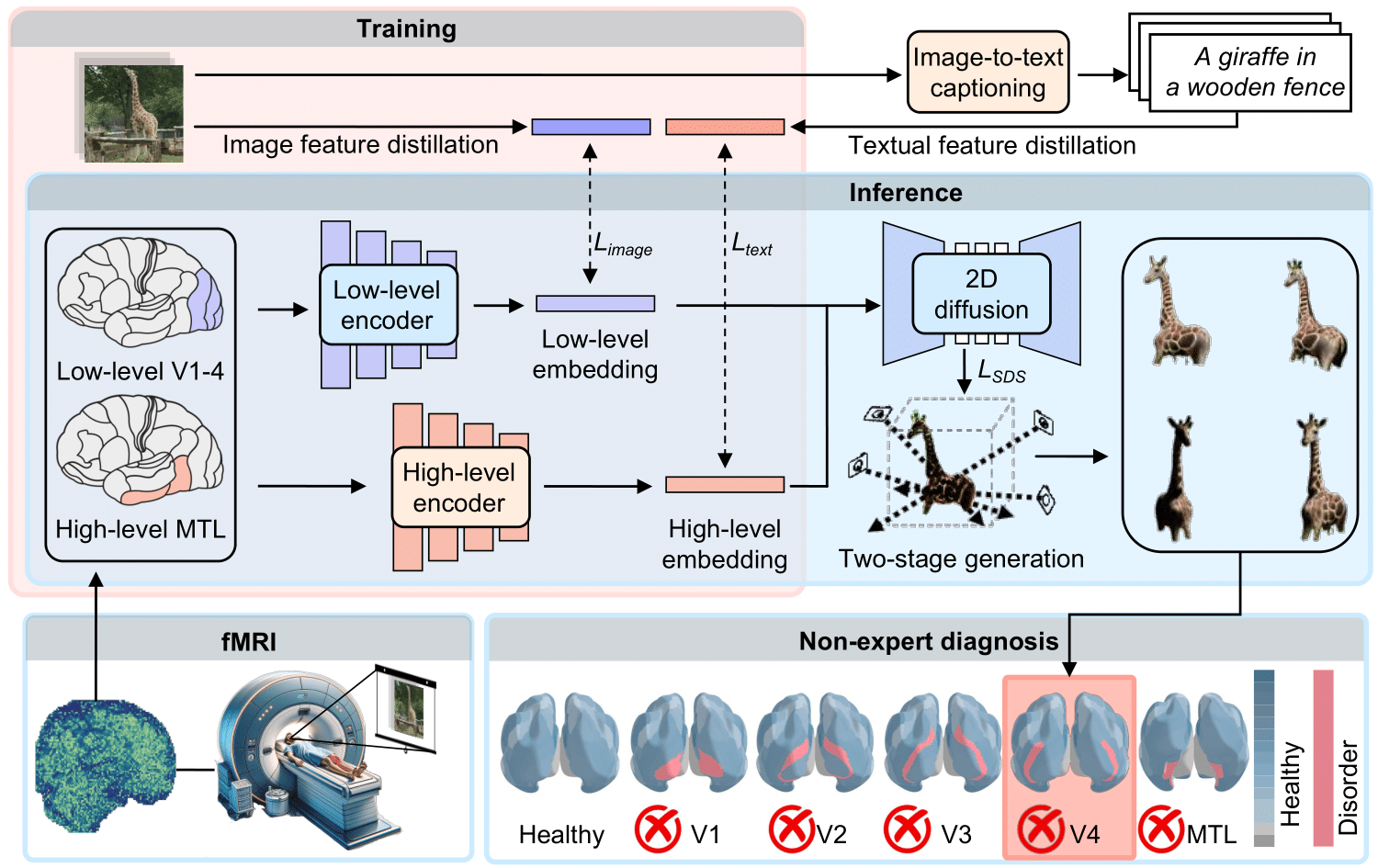

Understanding the hidden mechanisms behind human visual perception is a fundamental quest in neuroscience and underpins a wide variety of critical applications, e.g., clinical diagnosis. To that end, investigating the neural responses of human mental activity, for example through functional Magnetic Resonance Imaging (fMRI), has been a significant research vehicle. However, analyzing fMRI signals is challenging and costly, and it demands professional training. Despite remarkable progress in artificial intelligence (AI) based fMRI analysis, existing solutions are limited and far from clinically meaningful. In this context, we demonstrate how AI can go beyond the current state of the art by decoding fMRI into visually plausible 3D visuals, enabling automatic clinical analysis of fMRI data, even without healthcare professionals. We reformulate the task of analyzing fMRI data as a conditional 3D scene reconstruction problem. We design a novel cross-modal 3D scene representation learning method, Brain3D, that takes as input the fMRI data of a subject who was presented with a 2D object image and yields as output the corresponding 3D object visuals. Importantly, we show that in simulated scenarios our AI agent captures the distinct functionalities of each region of the human visual system as well as their intricate interplay, aligning remarkably with established discoveries in neuroscience. Non-expert diagnosis indicates that our model can successfully identify disordered brain regions, such as V1, V2, V3, V4, and the medial temporal lobe (MTL), within the human visual system. We also present results in a cross-modal 3D visual construction setting, showcasing the perceptual quality of our 3D scene generation.

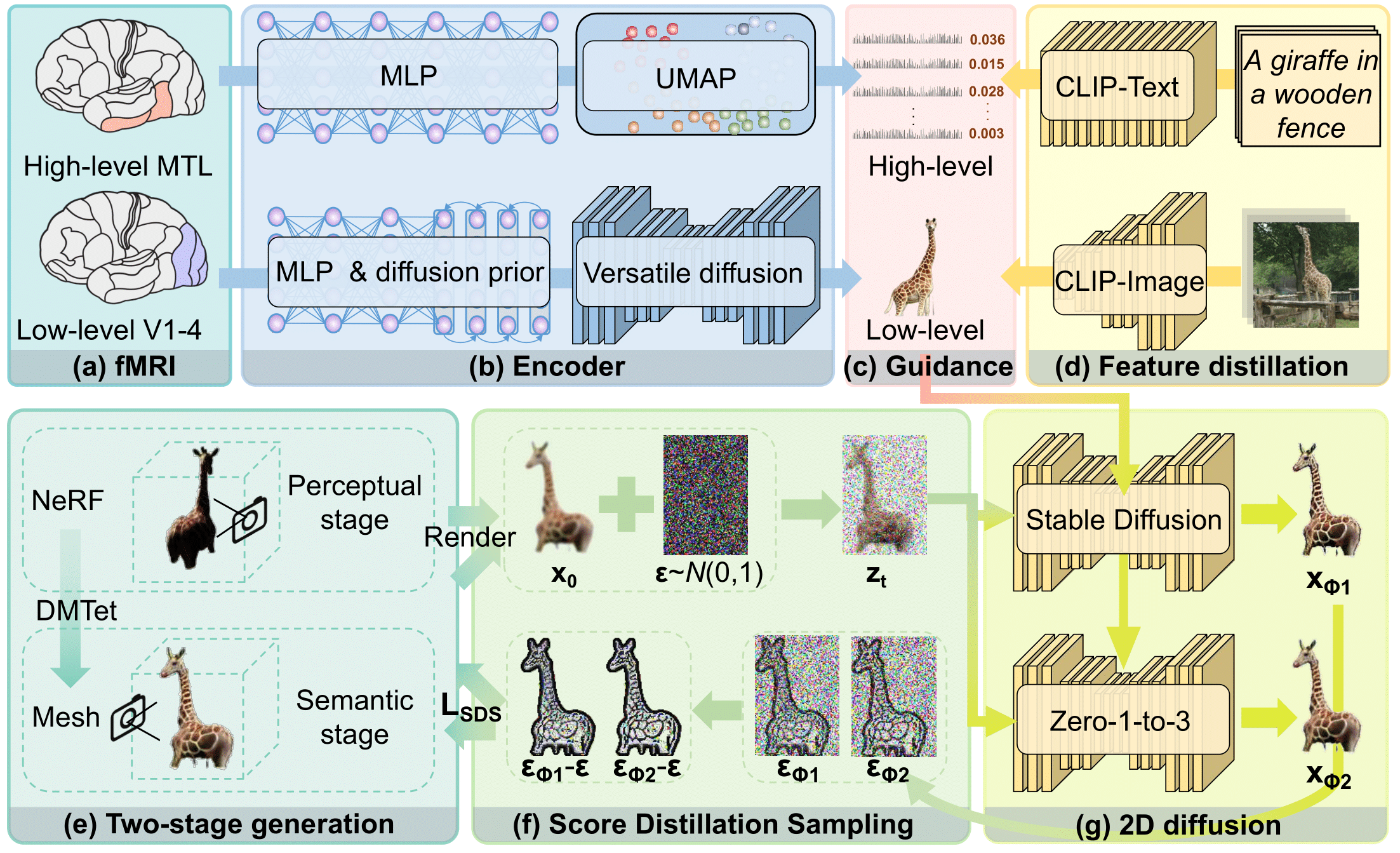

To test the effectiveness of Brain3D in 3D generation, we perform comprehensive comparisons with an existing 2D method, MindEye1. From the fMRI data, Brain3D extracts rich appearance, semantic, and geometric information that is highly consistent with the stimulus images. Despite the limited view of the giraffe, skier, and teddy bear in the three cases (see the last two rows), our bio-inspired model can process 3D details and comprehend their semantics beyond what is immediately visible in the 2D image, achieving finer semantic details than MindEye1. This result underscores the remarkable capability of human neural processing to construct intricate 3D structures by interpreting and internalizing 3D geometric information from 2D visual stimuli.

images

3D objects from fMRI


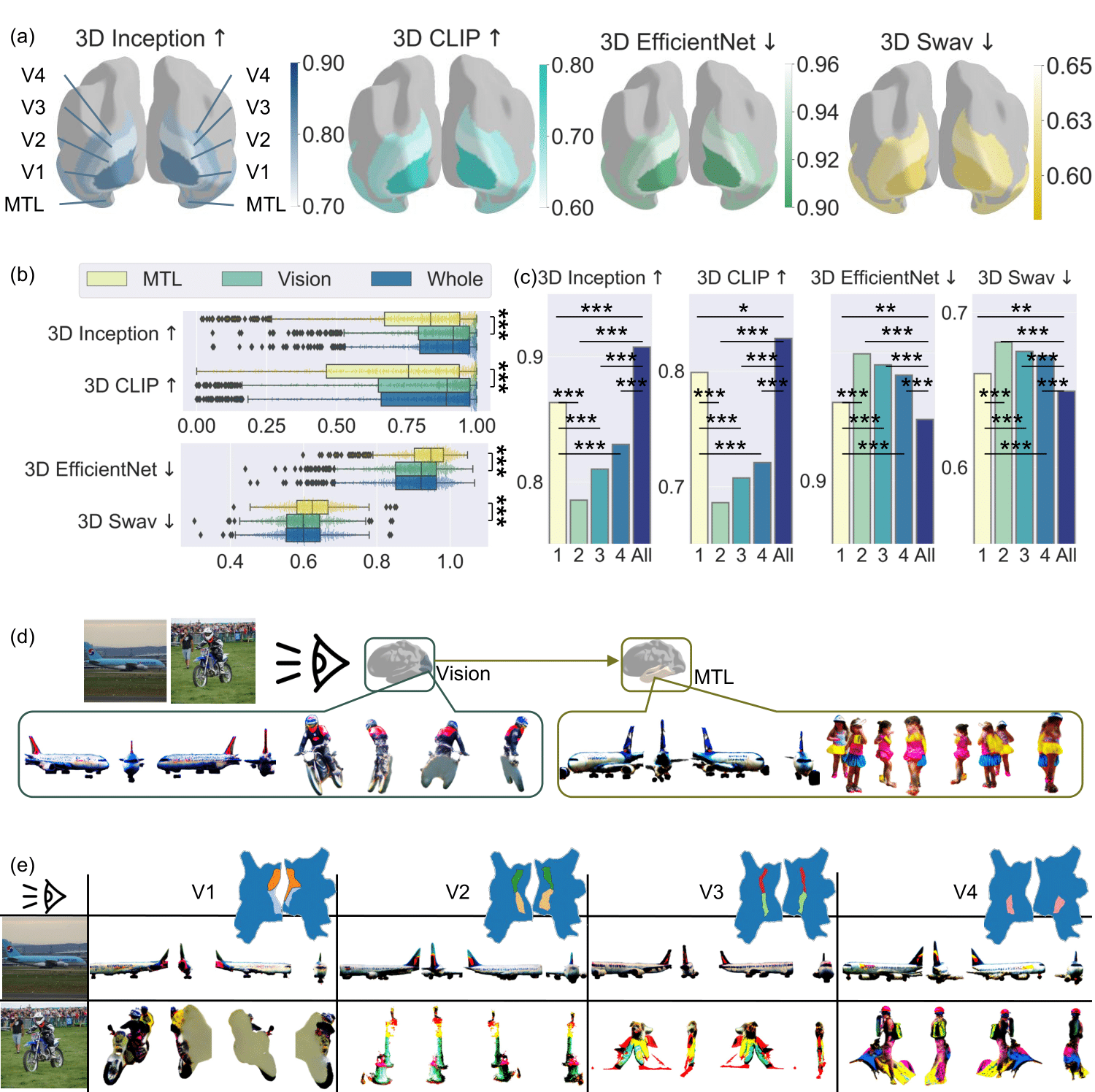

We investigate whether our model presents biological consistency in terms of regional functionalities.

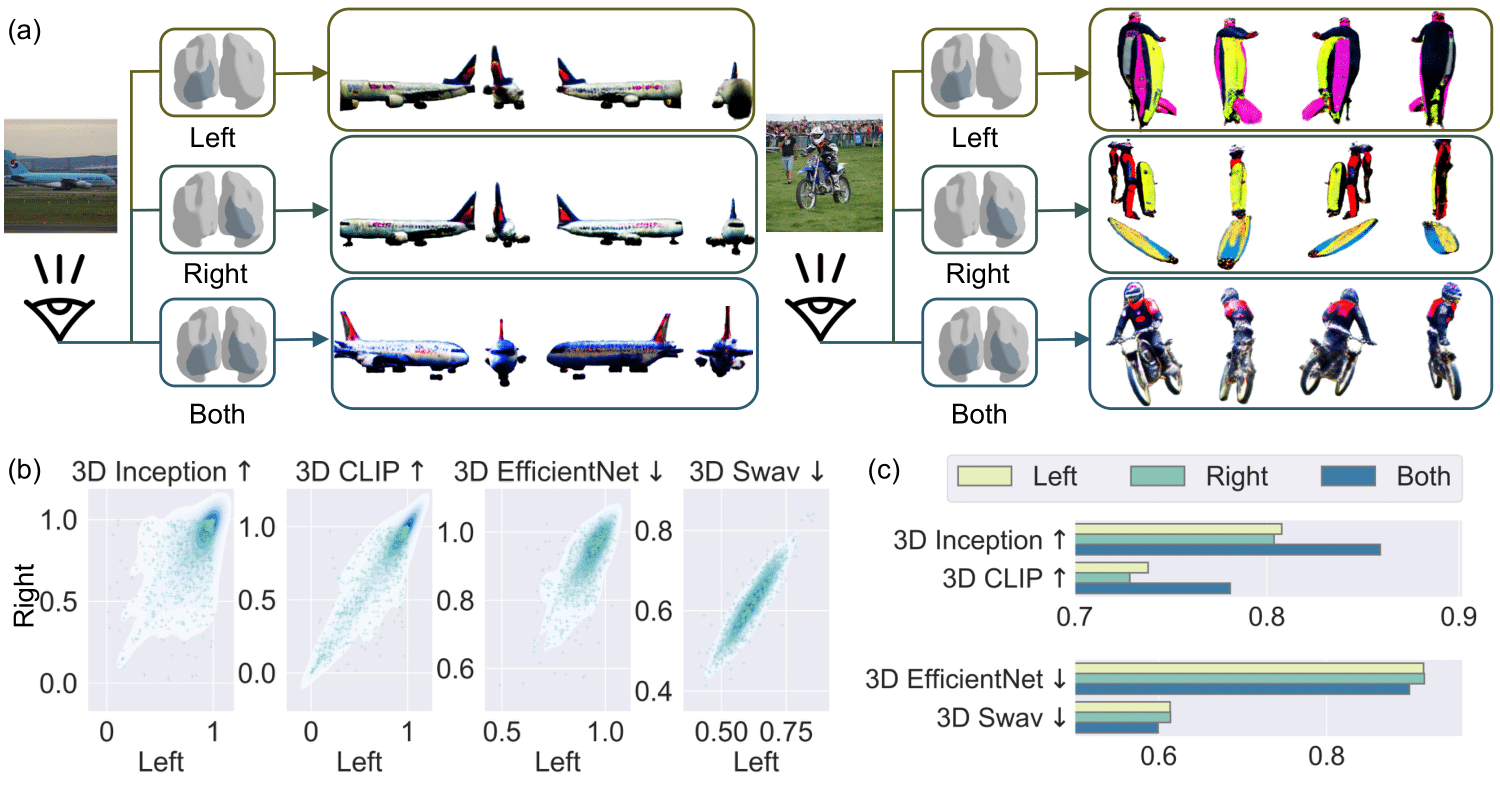

We first examine the roles of the left and right brain hemispheres, as well as their collaboration during the 3D object reconstruction process.

Specifically, we observe that the two hemispheres contribute differently to object perception, with each performing distinctly on specific objects. The left hemisphere tends to depict finer details and intricate structures in object visualization, while the right hemisphere better captures the overall shape and silhouette. These results align with previous findings that the left hemisphere is involved in detail-oriented tasks, while the right hemisphere is involved in more holistic tasks [2, 3].

We further investigate the functionalities of the various visual regions and the medial temporal lobe (MTL) region. Our results show that the visual cortex exhibits higher visual decoding ability than the MTL region. The significance level refers to the probability of error when rejecting the null hypothesis that the visual cortex performs similarly to the MTL region. Specifically, the visual cortex captures local fine-grained visual information such as the "windows" of an airplane and the "motorcycle rider", while the MTL region captures holistic shapes like "airplane skeleton" and "person". The MTL visualization thus highlights higher-level, 3D-shape-related concepts while forgoing pixel-level details. This finding provides a novel perspective on how the MTL region may contribute to the formation and recall of long-term memories, emphasizing its role in abstracting complex visual information.

Among the visual regions, V1 exhibits the strongest visual representation ability. Breaking this down further, the V1 region plays a pivotal role in handling elementary visual information, including edges, features, and color. The V2 and V3 regions synthesize various features into complex visual forms, while the V4 region focuses on basic color processing at a coarse level. Our results also bring to light the interdependence within the visual cortex. Specifically, we demonstrate that the V2, V3, and V4 regions rely on the V1 region for constructing 3D vision. This is evident from the disrupted 3D geometries. This reliance likely stems from the fact that these regions process information initially received by V1 [4], indicating a foundational dependence on V1 for effective visual processing. These findings emphasize the collaborative and interactive roles of these brain regions in forming a comprehensive visual representation. The model's behavior is thus biologically consistent to a great extent.
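To make the significance level in the visual cortex versus MTL comparison concrete, the following is a minimal sketch of a paired sign-flip permutation test. The page does not state which statistical test was used, so the choice of test, the function name `paired_permutation_p_value`, and the score values are all assumptions for illustration; the scores are placeholders, not results from Brain3D.

```python
import random

def paired_permutation_p_value(scores_a, scores_b, n_permutations=10000, seed=0):
    """One-sided paired sign-flip permutation test (illustrative assumption).

    Null hypothesis: region A and region B decode equally well, so the sign
    of each per-stimulus score difference is arbitrary.
    Returns the estimated probability of a mean difference at least as large
    as the observed one under that null.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("score lists must be paired (same length)")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = sum(diffs) / len(diffs)
    rng = random.Random(seed)
    at_least_as_large = 0
    for _ in range(n_permutations):
        # Randomly flip the sign of each paired difference.
        flipped = sum(d if rng.random() < 0.5 else -d for d in diffs) / len(diffs)
        if flipped >= observed:
            at_least_as_large += 1
    # Add-one correction keeps the estimate strictly positive.
    return (at_least_as_large + 1) / (n_permutations + 1)

# Placeholder scores (NOT results from the paper): one value per test stimulus.
visual_cortex_scores = [0.71, 0.68, 0.74, 0.66, 0.70, 0.73, 0.69, 0.72]
mtl_scores = [0.55, 0.60, 0.58, 0.52, 0.61, 0.57, 0.54, 0.59]

p_value = paired_permutation_p_value(visual_cortex_scores, mtl_scores)
print(f"p = {p_value:.4f}")
```

A small p-value here would mean that the null hypothesis of similar performance is rejected with a low probability of error. A permutation test is used in this sketch only because it makes no distributional assumptions and needs nothing beyond the standard library.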

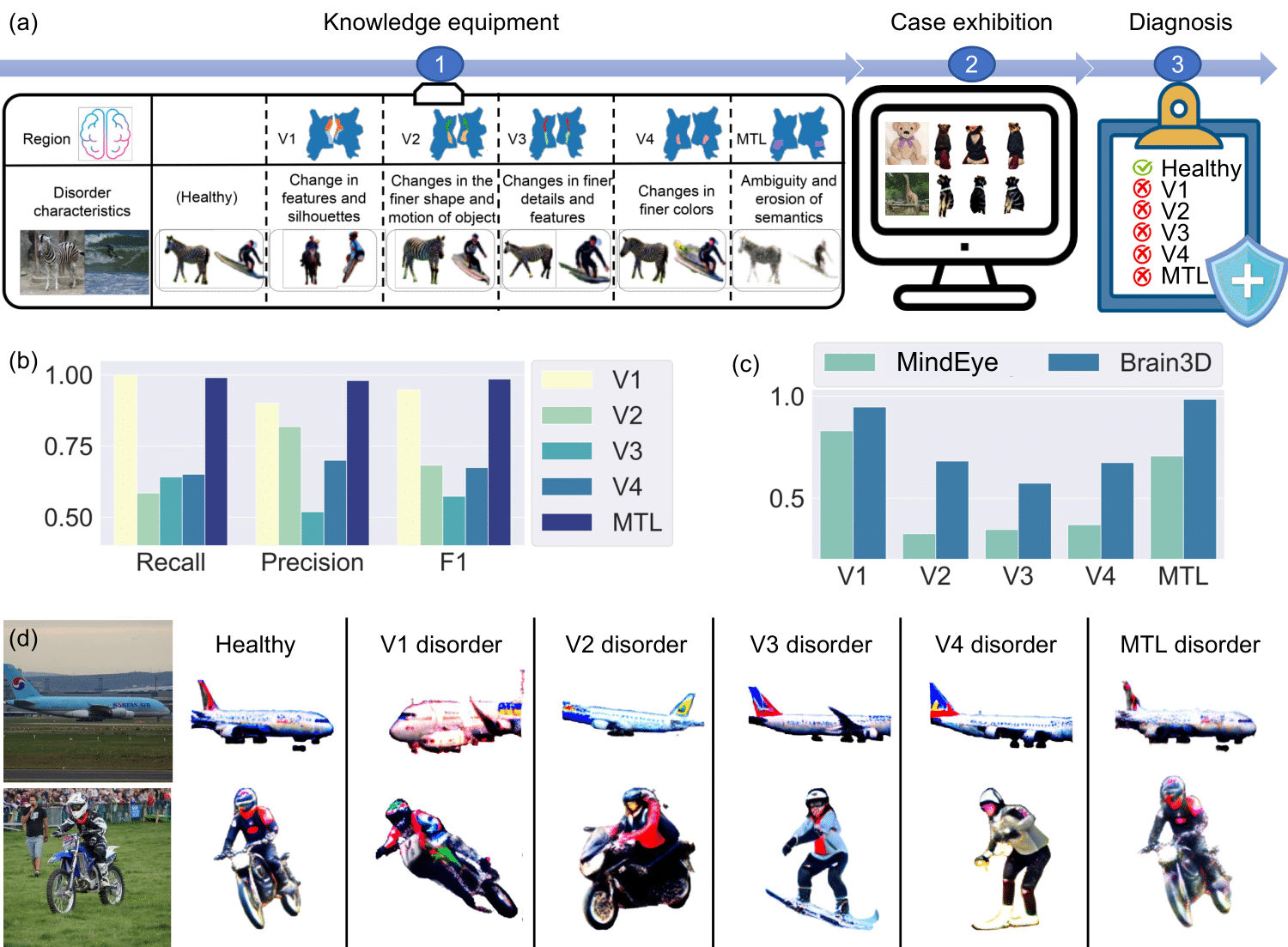

Motivated by the high biological consistency in terms of regional functions, we examine the usefulness of Brain3D in diagnosing disordered brain regions by designing a series of simulation-based experiments. Notably, almost perfect diagnosis is achieved for disorders in the V1 and MTL regions. A V1 disorder tends to cause significant distortion in the output, consistent with the earlier finding about its critical role in processing basic visual information. A disorder in the V2 region leads to changes in the detailed shape and motion of objects, e.g., the orientation of the airplane and the shape of the motorcycle. A disorder in the V3 region primarily affects details and movements, e.g., the change from motorcycle to rider. A disorder in the V4 region might cause color shifts and variation. In contrast, a disorder in the MTL region affects high-level semantics, e.g., increased ambiguity in object appearance.
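One way to picture such a simulated disorder is to perturb only the voxels that belong to a single region before the signal is decoded. The sketch below is an assumption about how this could be set up, not the procedure used for Brain3D: the region layout `REGION_SLICES`, the helper names, the additive Gaussian noise model, and the toy signal are all hypothetical.

```python
import random

# Hypothetical voxel layout: each region owns a contiguous slice of a
# flattened fMRI vector. Real region masks would come from an atlas.
REGION_SLICES = {
    "V1": slice(0, 4),
    "V2": slice(4, 8),
    "V3": slice(8, 12),
    "V4": slice(12, 16),
    "MTL": slice(16, 20),
}

def simulate_disorder(fmri, region, noise_std=1.0, seed=0):
    """Return a copy of `fmri` with Gaussian noise added to one region only."""
    if region not in REGION_SLICES:
        raise KeyError(f"unknown region: {region}")
    rng = random.Random(seed)
    perturbed = list(fmri)  # copy, so the healthy signal is left untouched
    start, stop, step = REGION_SLICES[region].indices(len(perturbed))
    for i in range(start, stop, step):
        perturbed[i] += rng.gauss(0.0, noise_std)
    return perturbed

def changed_indices(healthy, perturbed):
    """List the voxel indices whose values differ between the two signals."""
    return [i for i, (h, p) in enumerate(zip(healthy, perturbed)) if h != p]

# Toy signal with 20 voxels (placeholder values, not real fMRI data).
healthy_signal = [0.1 * i for i in range(20)]
disordered_signal = simulate_disorder(healthy_signal, "V4")
print(changed_indices(healthy_signal, disordered_signal))
```

In a setup like this, the perturbed signal would then be passed to the decoder, and the resulting 3D output compared with the output from the healthy signal to judge which region was affected.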

We also present more videos of 3D objects reconstructed by Brain3D from participants' fMRI, offering a thorough overview of the 3D details. The videos may take a few seconds to load.

images

3D objects from fMRI
